When ChatGPT launched in November 2022, many people got their first taste of what generative AI (GenAI) technology was capable of. GPT, which stands for “Generative Pretrained Transformer,” is a family of large language models (LLMs) created by OpenAI.

The first iteration of GPT emerged in 2018 with GPT-1, a model with roughly 117 million parameters. Subsequent versions were trained on ever larger collections of data, including books, websites like Wikipedia, and articles. GPT-2 (2019) was trained on a dataset of over 8 million web documents, and GPT-3, released in June 2020, used 175 billion parameters and drew on more than 45 terabytes of raw text before filtering. GPT-4, a version better at handling more complex commands than its predecessor, was released to the public in March 2023.

The sheer scale of the data used to train LLMs is one of the reasons they’re good at generating compelling and impactful responses to natural language queries. But it is also why LLMs trained on what is often questionable source data (e.g., Wikipedia) are prone to error, bias, and flat-out making things up (i.e., hallucinations).

The good news for enterprises that want to use GenAI technology is that it’s possible to deploy an LLM safely by grounding generated responses in chunks of data relevant to the prompt at hand. Delivering relevant answers is a use case that hits close to home for Coveo, and one that even the largest enterprises can deploy right now.

However, you need a careful approach to content management so that your GenAI system can answer questions and solve problems in ways that are safe, secure, and relevant to each individual user.

Safely Configuring LLMs for the Enterprise

The best way to integrate GenAI technology is to start by identifying a use case that solves an immediate problem in your organization. Often, this boils down to helping employees manage information.

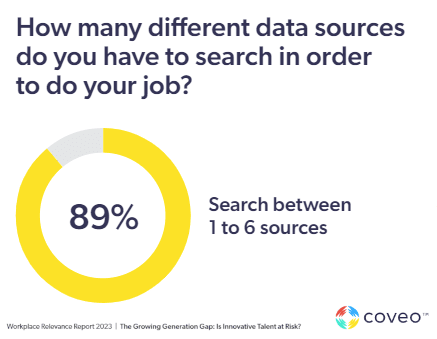

In Coveo’s 2023 Workplace Relevance Report, nearly 90% of knowledge workers surveyed said they’re upset when they can’t find the correct information. The same percentage of respondents indicated they regularly search between one and six data sources for the right answers.

Content Governance

LLMs can help employees find the answers they need more quickly because they excel at processing, retrieving, and summarizing data. Most companies are sitting on large caches of data in many forms. Across your company, you have information stored in siloed repositories like intranets, voice and video recordings, CRMs, and email (to name a few).

This is the data that can “feed” your GenAI solution so that it generates appropriate answers. But you need to take steps to clean up the bad data (a.k.a. the “garbage”) before incorporating an LLM or a GenAI tool into your information workflow. That is why content governance comes first.

Content governance relies on creating good practices around your organization’s content. It ensures that domain experts are involved in content management, all employees are empowered to create and share content, and that content is easily searchable — taking us to our next point.

Unified Index Layered with AI

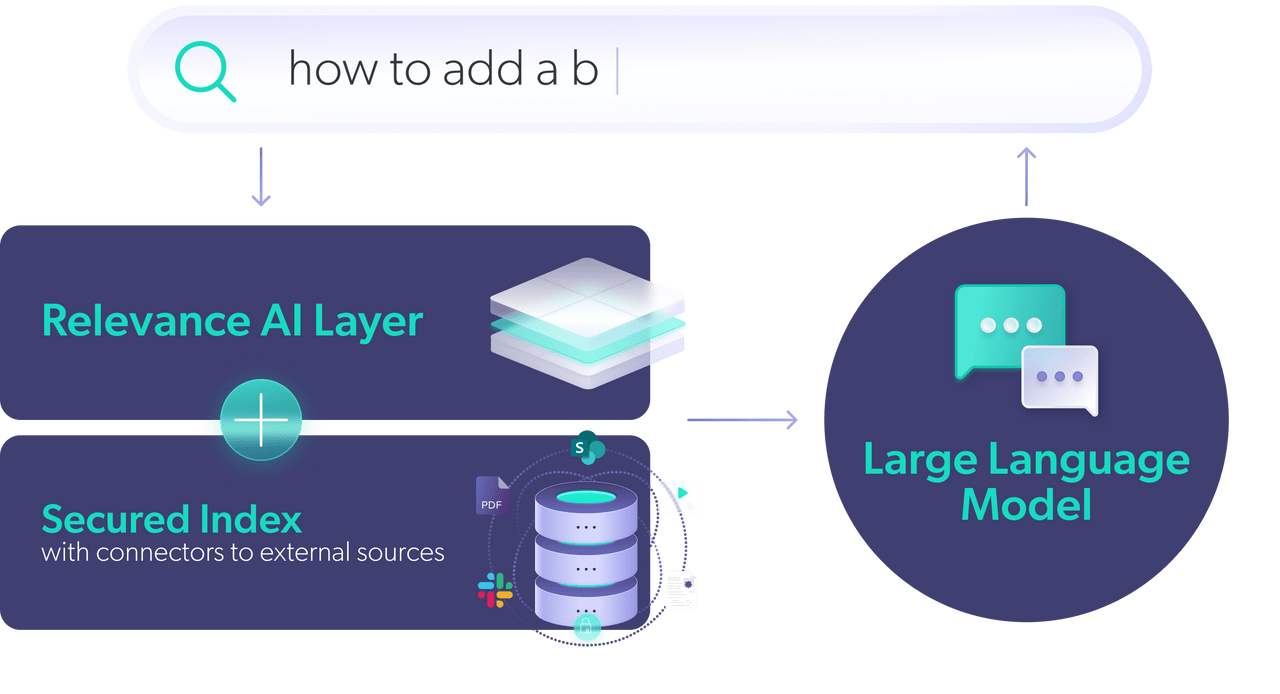

Good content governance is the beginning — once you have your clean data, you wouldn’t want to feed all of it directly to an LLM. It’s expensive, exposes your company to privacy and security risks, and heightens the chances of hallucinations. Instead, you want to feed your LLM a limited diet of small chunks of content relevant to the prompt a user has submitted.
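To make the idea of “small chunks” concrete, here is a minimal sketch of one common way to split a document before indexing: fixed-size word windows with a small overlap so a thought isn’t cut off at a boundary. The function name `chunk_words` and the default sizes are illustrative assumptions; production systems often chunk by tokens, sentences, or document structure instead.

```python
def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of at most `size` words; each chunk repeats the
    last `overlap` words of the previous one to preserve context."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


doc = " ".join(f"w{i}" for i in range(120))
chunks = chunk_words(doc, size=50, overlap=10)
print(len(chunks))  # 3
```

Only the handful of chunks that match a prompt are later sent to the LLM, rather than whole documents.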

These chunks should be pulled from a unified index that respects access control and security permissions at indexing time. Then, when a user prompts your GenAI for an answer, it doesn’t go immediately to a giant pile of content — instead, ML models select the most relevant chunks of information and feed those to the LLM. The LLM then uses those to generate a personalized response for that user.
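The flow described above (security trimming first, then relevance ranking, then a small top-k selection) can be sketched as follows. This is a hypothetical illustration, not Coveo’s implementation: the `Chunk` type, the `allowed_groups` field standing in for permissions captured at indexing time, and the term-overlap `score` function standing in for an ML ranking model are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # hypothetical ACL recorded at indexing time


def score(query: str, text: str) -> int:
    # Stand-in for an ML ranking model: count shared lowercase terms.
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve(query: str, user_groups: set[str], index: list[Chunk], k: int = 3) -> list[Chunk]:
    # 1. Security trimming: drop chunks the user is not permitted to see.
    visible = [c for c in index if c.allowed_groups & user_groups]
    # 2. Rank what remains and keep only the k most relevant, non-zero matches.
    ranked = sorted(visible, key=lambda c: score(query, c.text), reverse=True)
    return [c for c in ranked[:k] if score(query, c.text) > 0]


index = [
    Chunk("kb-1", "Reset your VPN password from the portal", frozenset({"all"})),
    Chunk("hr-7", "Payroll schedule for first quarter", frozenset({"hr"})),
]
print([c.doc_id for c in retrieve("payroll first quarter", {"all"}, index)])        # []
print([c.doc_id for c in retrieve("payroll first quarter", {"all", "hr"}, index)])  # ['hr-7']
```

Filtering before ranking means a chunk the user can’t access never becomes a candidate for the LLM’s context.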

Investing in advanced GenAI tools is important, but governance and access are equally crucial, since technology can’t replace subject matter experts (SMEs). GenAI technology helps with tasks like ranking and information retrieval, but it’s only helpful if the content it’s using is of the highest quality.

That means leveraging a solid content management strategy focused on Knowledge Centered Service (KCS).

How KCS Benefits GenAI

KCS is a knowledge management best practice that employs tools like search analytics, content gap identification, and the appropriate structuring of data. In short, it helps you clean up your content. Garbage in, garbage out (GIGO) is a computer science concept which states that flawed, “nonsense” input data produces nonsense output. GIGO holds true for data in any form, structured or unstructured, and especially for unstructured content.

In the context of GenAI, KCS can help improve the quality of input data by ensuring that the content sources your index draws on are accurate and always current. This reduces the amount of irrelevant information that is fed into the GenAI system.
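One way to act on “accurate and always current” is a gate that decides which articles are eligible for the index. The sketch below assumes each article carries a review status and a last-reviewed date; the `Article` fields, the `"validated"` status value, and the one-year cutoff are hypothetical choices for illustration, not a KCS requirement.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Article:
    doc_id: str
    status: str          # hypothetical review state, e.g. "draft" or "validated"
    last_reviewed: date


def indexable(articles: list[Article], today: date, max_age_days: int = 365) -> list[str]:
    """Return IDs of articles that are validated and were reviewed recently enough."""
    cutoff = today - timedelta(days=max_age_days)
    return [a.doc_id for a in articles
            if a.status == "validated" and a.last_reviewed >= cutoff]


articles = [
    Article("a1", "validated", date(2023, 9, 1)),
    Article("a2", "draft", date(2023, 9, 1)),        # not yet validated
    Article("a3", "validated", date(2021, 1, 15)),   # stale
]
print(indexable(articles, today=date(2023, 10, 1)))  # ['a1']
```

Articles that fail the gate can be routed back to their owners for review instead of silently feeding the GenAI system.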

By improving the quality of input data, KCS can help improve the accuracy and relevance of GenAI output.

You can start implementing a KCS strategy immediately by focusing on the documents that solve problems and answer questions today. As with any discipline, knowledge management has evolved. But the core purpose remains the same: capture, distribute, and effectively use existing knowledge, to paraphrase scholar and subject-matter expert Thomas Davenport.

Refining Content for GenAI

When it comes to both security and relevance to the query or prompt at hand, there are techniques that can make your LLM much more effective. For example, retrieval-augmented generation, often referred to as RAG, is an approach that improves the quality of generated answers by coupling your LLM with a precise retrieval step that supplies relevant content at query time.
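In a RAG setup, the retrieved chunks are placed in the prompt alongside the user’s question, with an instruction to answer only from them. The sketch below shows that assembly step only; the prompt wording and the `build_grounded_prompt` name are assumptions, and sending the result to an actual LLM is left to whichever model or API your system uses.

```python
def build_grounded_prompt(question: str, chunks: list[tuple[str, str]]) -> str:
    """Assemble a RAG prompt from (source_id, text) chunks and a question,
    instructing the model to answer only from the numbered sources."""
    sources = "\n".join(
        f"[{i}] ({src}) {text}" for i, (src, text) in enumerate(chunks, start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources by number. If they do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_grounded_prompt(
    "How do I reset my VPN password?",
    [("kb-1", "Reset your VPN password from the self-service portal.")],
)
print(prompt)
```

Numbering the sources gives the model something concrete to cite, which also makes the answer easier to check against the original documents.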

When architecting the retrieval approach, there are several components to consider including:

- Unifying content sources — Create a unified index by making disparate content sources accessible to your LLM. A unified index speeds information retrieval and improves relevance, because every query searches one consolidated collection instead of many silos.

- Managing permissions — Ensure that your index maintains user permissions associated with every document — including permissions within user groups. This is important when it comes to customizing the LLM’s response to the user, since not all users will have access to all information sources.

- Prioritizing data security & privacy — Implement robust security measures including encryption and access controls to protect sensitive information from unauthorized access. You should also ensure that any data used to feed the LLM complies with data privacy regulations and that you’ve obtained the appropriate user consent before use.
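The permissions point above includes permissions inherited through user groups. A minimal sketch of that check expands a user’s direct groups to every group they inherit, then intersects the result with a document’s access list. The group names and the `PARENT_GROUPS` map are hypothetical; real identity systems expose membership in their own ways.

```python
from collections import deque

# Hypothetical group graph: each group maps to the groups it belongs to.
PARENT_GROUPS: dict[str, set[str]] = {
    "payroll-team": {"finance"},
    "finance": {"employees"},
    "support": {"employees"},
}


def effective_groups(direct: set[str], parents: dict[str, set[str]]) -> set[str]:
    """Breadth-first expansion of direct groups into all inherited groups."""
    seen = set(direct)
    queue = deque(direct)
    while queue:
        for parent in parents.get(queue.popleft(), set()):
            if parent not in seen:  # `seen` also protects against cycles
                seen.add(parent)
                queue.append(parent)
    return seen


def can_read(user_groups: set[str], doc_acl: set[str], parents: dict[str, set[str]]) -> bool:
    return bool(effective_groups(user_groups, parents) & doc_acl)


print(can_read({"payroll-team"}, {"finance"}, PARENT_GROUPS))  # True
print(can_read({"support"}, {"finance"}, PARENT_GROUPS))       # False
```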

Refining your content is useful for regular search and retrieval, but it doesn’t quite achieve a conversational experience. Your index is what makes up the “relevance AI layer” of the GenAI model by connecting it with secure content sources. GenAI then enhances this search experience by connecting the (now high quality) information in your (newly unified) index to the specific user and query context.

The LLM is the final step in the process of a generative experience. It generates relevant and tailored responses using contextually relevant information – the information within your content sources and information about the person conducting the search.

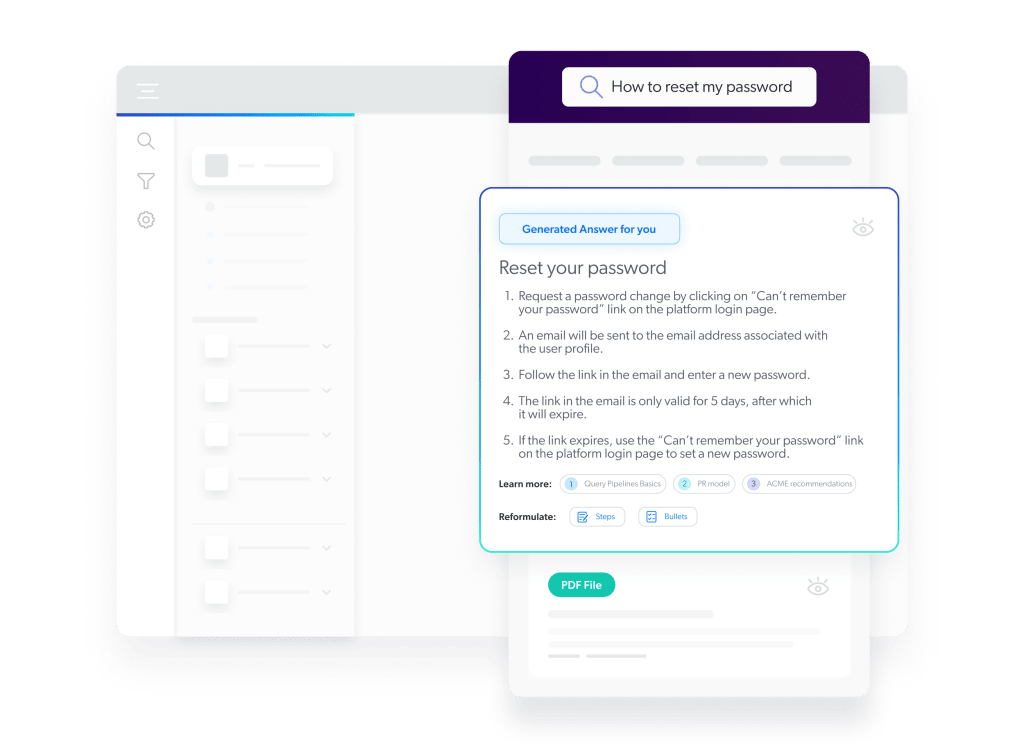

Here’s what search looks like with and without an LLM involved.

- Without an LLM: The user articulates what they think they want, then evaluates the returned links to get their answer. If the answer isn’t there, they must refine their search to try to be more accurate.

- With an LLM: An LLM takes that initial search to the next step by bringing users from exploration (search) to evaluation (conversational). Generated answers can be grounded in content that is contextually relevant to the user.

Create Safe Generative Experiences

Great generative experiences are built on great content. The question is – which comes first, the content or the experience? Organizations can easily answer this question by picking an initial use case, then creating parameters around the content needed to get started.

Moving from use case to implementation requires some planning, but you don’t have to go it alone. Choosing a GenAI solution that’s enterprise ready, and one that scales as your business changes, is easier when you work with a vendor with GenAI experience – one who can architect it correctly and help you manage cost, security, and implementation.

Apologies for dragging out the food metaphor again, but it really is important to create the correct diet of information for your GenAI application.

Coveo is an Insight Engine – our platform is focused on surfacing great (i.e., “relevant”) search results using high-quality data. AI is integral to this information retrieval process. For example, our enterprise-ready Relevance Generative Answering leans on the Coveo platform to draw on chunks of information from documents that the user has permission to access.


These relevant chunks are then sent to the LLM, which generates an answer that’s relevant to the specific user. This methodology provides guardrails not only for accessibility and permissibility, but also against hallucinations. This is key. In fact, we are often asked about ring-fencing, or the ability to sequester certain information, such as financial or HR information, so that it is only accessible to certain individuals.
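Ring-fencing can be sketched as an extra filter on top of ordinary document permissions: chunks in a sequestered category are dropped unless the user holds a clearance for that category, so they never reach the LLM’s prompt. The category labels, the `RESTRICTED` set, and the dictionary shape of a chunk are hypothetical choices for this illustration.

```python
# Hypothetical ring-fenced categories; everything else follows normal permissions.
RESTRICTED = {"hr", "finance"}


def ring_fence(chunks: list[dict], clearances: set[str]) -> list[dict]:
    """Drop chunks from ring-fenced categories unless the user holds that clearance."""
    return [c for c in chunks
            if c["category"] not in RESTRICTED or c["category"] in clearances]


chunks = [
    {"id": "kb-1", "category": "it"},
    {"id": "hr-3", "category": "hr"},
    {"id": "fin-9", "category": "finance"},
]
print([c["id"] for c in ring_fence(chunks, {"hr"})])  # ['kb-1', 'hr-3']
```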

Coveo Relevance Generative Answering uses dynamic grounding and a grounded context function. This helps keep the AI system’s output accurate, consistent, and secure.

In the context of security, we recommend configuring your LLM to be stateless so that it doesn’t retain prompts or content and can only be used to generate natural language answers. This is crucial to maintaining governance over the LLM.
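On the application side, statelessness can mean that every request is built from scratch: a fixed instruction, the grounding context for this query, and the question, with no earlier turns reused and nothing stored afterward. The sketch below assumes a generic `generate` callable that accepts chat-style role/content messages; that message shape is a common convention, not a specific vendor API. Whether the model provider itself retains prompts is a separate setting you would configure with that provider.

```python
from typing import Callable

Message = dict[str, str]  # assumed chat-style {"role": ..., "content": ...} shape


def stateless_answer(generate: Callable[[list[Message]], str],
                     question: str, context: str) -> str:
    """Send one self-contained request. No history from earlier calls is
    included, and nothing is kept after the answer is returned."""
    messages = [
        {"role": "system", "content": "Answer only from the provided context."},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]
    return generate(messages)


# Usage with a stand-in model that just reports how many messages it received.
def fake_model(msgs: list[Message]) -> str:
    return f"received {len(msgs)} messages"


print(stateless_answer(fake_model, "What is our VPN policy?", "VPN is required off-site."))
```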

Establishing permissions across your users allows the LLM to tailor its responses to each user. By keeping permissions intact, two different users with very different roles and permissions within the same organization may see very different answers to the query, “Provide me an analysis of first quarter payroll.”

How Searchers Feed GenAI

The beauty of integrating GenAI with enterprise search is that you can continue to “feed” a proprietary system by allowing your organization to curate the generative experience. You can continuously refine and customize the presentation of the text and relevant citations that the search engine generates.

Coveo Relevance Generative Answering allows you to provide summaries, step-by-step instructions, bulleted points, and references to source documents.

You can also empower your users — the people doing the actual searches — to feed the system by having them evaluate the helpfulness of a generated answer. This is as simple as providing a thumbs up or down function and is a straightforward way to find gaps in your knowledge base.
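Thumbs up/down votes become useful for spotting knowledge gaps once they are aggregated per query. A minimal sketch: tally votes for each normalized query and flag queries that have enough votes but a low helpful rate. The `knowledge_gaps` name, the three-vote minimum, and the 50% threshold are illustrative assumptions.

```python
from collections import defaultdict


def knowledge_gaps(feedback: list[tuple[str, bool]], min_votes: int = 3,
                   threshold: float = 0.5) -> list[str]:
    """Return queries whose generated answers are mostly rated unhelpful."""
    votes: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # [thumbs_up, total]
    for query, helpful in feedback:
        tally = votes[query.strip().lower()]
        tally[0] += int(helpful)
        tally[1] += 1
    return sorted(q for q, (up, total) in votes.items()
                  if total >= min_votes and up / total < threshold)


feedback = (
    [("vpn reset", True)] * 4
    + [("expense policy", False)] * 3 + [("expense policy", True)]
    + [("parking", False)] * 2  # too few votes to judge yet
)
print(knowledge_gaps(feedback))  # ['expense policy']
```

Flagged queries point to topics where the knowledge base likely needs a new or updated article.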

Crafting Amazing Generative Experiences

The tools to provide your audiences — whether customers, agents, or employees — with a truly advisory experience are now available. But they need to be implemented iteratively with thought and consideration.

With more than a decade in the LLM space, Coveo offers trusted AI experts that can help you shape the experience that benefits your business best. Talk to an expert today!
