As generative AI (GenAI) transforms the way we work, financial services firms must carefully evaluate how to integrate AI-driven capabilities that promote innovation while maintaining the security, accuracy, and compliance required in a highly regulated environment. Unlike consumer-facing AI models, which may prioritize creativity and broad general knowledge, AI in financial services must deliver reliable, transparent, and verifiable information, minimizing the risk of hallucinations and errors.

Vanguard, a leader in investment management, has been at the forefront of this AI evolution, demonstrating a remarkable commitment to thoughtful and responsible innovation. With a vast knowledge ecosystem of 14 million documents spread across multiple internal systems, the company has taken a measured, compliance-first approach to AI.

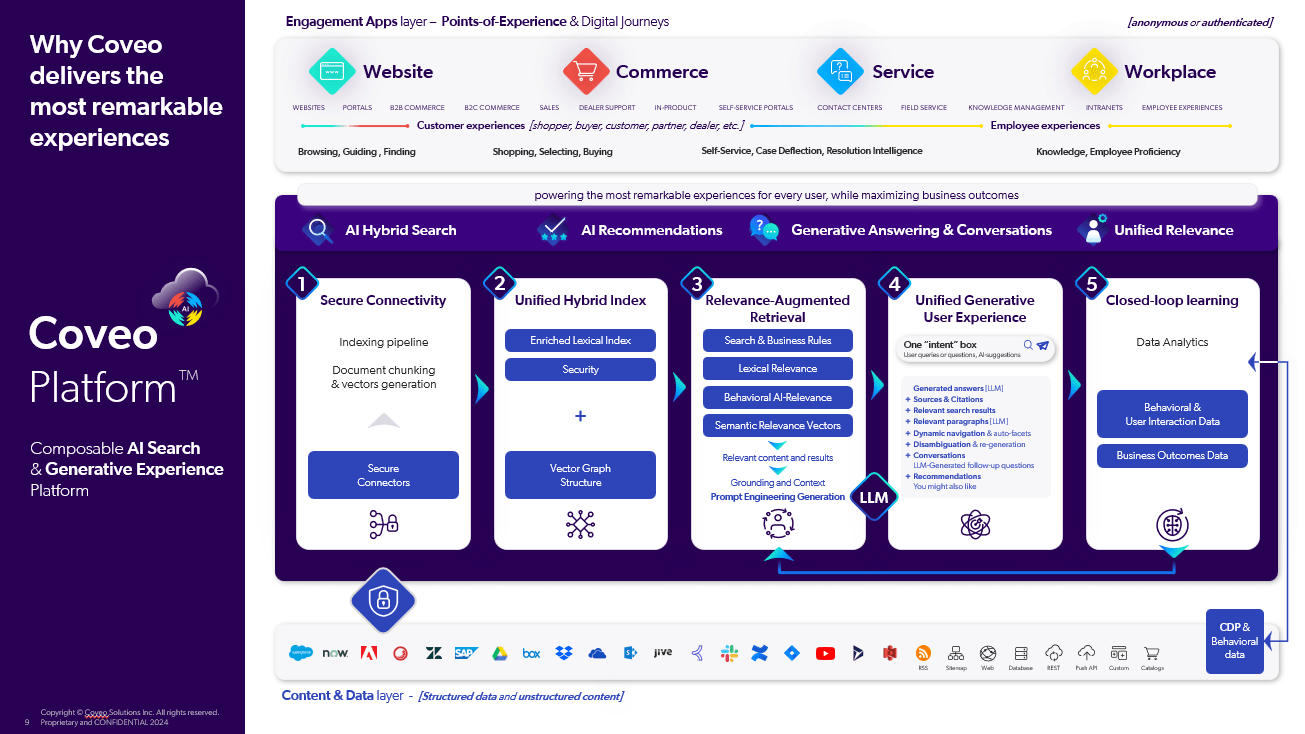

Rather than rushing GenAI into countless untested use cases, Vanguard rolled it out deliberately and experimentally, focusing on secure, search-powered AI that gives employees and clients accurate, relevant, and compliant information. Speaking at a recent Coveo employee experience roundtable, Laishon Gatson, Sr. Technical Product Manager and digital experience leader at Vanguard, shared insights into her team’s journey with generative AI and the Coveo AI-Relevance Platform™.

Balancing Innovation and Compliance in Financial Services

The financial services industry faces distinct challenges when implementing AI. Every interaction — whether internally for employees or externally for customers — must align with regulatory requirements, data security policies, and risk management frameworks.

Vanguard recognized early that content governance would be critical to its AI strategy. “For me, it’s still that content is king,” said Gatson, who has a 25-year tenure at Vanguard and a decade of search experience. “Regardless of what search engine or how much we use GenAI, it’s really based on the content that we are training these models on.”

This content-first approach helps ensure that AI retrieves and generates responses only from verified, up-to-date, and approved sources, reducing the risk of misinformation or regulatory breaches.

Relevant viewing: Clean Data, Smarter AI: The Impacts of KM on GenAI Effectiveness

Why AI in Finance Must Start with Search

One of the biggest misconceptions about GenAI is that it can operate effectively without high-quality data. In reality, AI is only as effective as the content it retrieves.

“Great search lays the groundwork for great AI,” said Patrick Martin, EVP of Global Customer Experience at Coveo. “Your answers are only as good as the content you feed it with.”

For Vanguard, this meant first implementing a secure, AI-powered search foundation that unified content across multiple systems while respecting document-level permissions, and only then expanding into generative AI. By ensuring AI retrieved content from regulated, curated knowledge sources, the company reduced the risks associated with AI-generated inaccuracies.

As a longtime champion of search and knowledge management, Gatson views search as far more than a simple box; she sees it as an immersive experience. Because her team had already built search and AI projects for both customers and employees, adopting GenAI felt like the natural next step.

Asked what excites her most about search, Gatson pointed to:

“GenAI and the evolution of search: going from strictly a list of search results to now being able to give summarized answers and being a much greater tool for our users to get to the information they need quickly and efficiently.”

Vanguard’s Approach to Secure and Compliant AI

Governance and Compliance: A Non-Negotiable Priority

Vanguard’s AI adoption has been guided by its internal GenAI Steering Committee, which includes stakeholders from legal, compliance, security, and product teams. The committee ensures that AI implementations align with FINRA and SEC requirements as well as internal policies, reducing the risk of compliance violations.

“We have lawyers involved. We have compliance reviews,” Gatson explained. “We’re thinking about what human-in-the-loop means, what our retention policies should be, and how long we keep generated answers.”

Vanguard’s AI governance strategy includes:

- Human validation of AI-generated responses to prevent misinformation

- Strict regulatory compliance alignment to meet data retention and security mandates

- AI access restricted to public and approved internal data

Without these measures, AI-powered financial interactions could pose significant regulatory risks.

Relevant reading: Enterprise Safety: 5 Pillars of GenAI Security

Preventing AI Hallucinations in Financial Information

One of the critical risks in AI adoption is hallucination, where AI generates plausible but incorrect responses. In financial services, where even minor inaccuracies can lead to misinformed investment decisions or compliance issues, this risk is unacceptable.

To mitigate hallucinations, Vanguard focused on AI-augmented search, ensuring:

- AI retrieves answers only from validated internal financial documentation

- Search results prioritize compliance and accuracy over generative fluency

- Content governance filters out outdated or misleading information
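To make the content-governance idea concrete, here is a minimal Python sketch of a filter applied before retrieval. It assumes each document carries a compliance-approval flag and a last-reviewed date; the `Document` fields, the `governed_sources` helper, and the one-year freshness window are hypothetical illustrations, not Vanguard’s or Coveo’s actual implementation.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Document:
    doc_id: str
    text: str
    approved: bool        # hypothetical flag set after compliance review
    last_reviewed: date   # hypothetical date of the most recent content review


def governed_sources(docs, today, max_age_days=365):
    """Return only approved documents reviewed within the last max_age_days.

    Anything unapproved or stale is dropped before retrieval, so the
    generative step never sees it.
    """
    cutoff = today - timedelta(days=max_age_days)
    return [d for d in docs if d.approved and d.last_reviewed >= cutoff]


# Example: only the approved, recently reviewed document survives.
docs = [
    Document("d1", "Current retirement-plan FAQ", True, date(2024, 6, 1)),
    Document("d2", "Superseded fee schedule", True, date(2022, 3, 15)),
    Document("d3", "Unreviewed draft", False, date(2024, 11, 30)),
]
print([d.doc_id for d in governed_sources(docs, today=date(2025, 1, 1))])  # ['d1']
```

Filtering at the source, rather than asking the model to ignore outdated material, keeps stale content out of the answer entirely; in practice the approval and review signals would come from the organization’s own content-management workflow.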

This approach helps hold AI responses to a high standard of accuracy, in line with Vanguard’s commitment to financial integrity and client trust.

Relevant reading: Learn about five Generative AI risks (and their solutions) in CX & EX

Managing Enterprise Knowledge Across 14 Million Documents

With 14 million documents spread across systems like SharePoint, ServiceNow, and various internal databases, information retrieval had become a challenge. Employees needed quick, reliable access to financial policies, client service guidelines, and regulatory documentation — but scattered knowledge systems created inefficiencies.

Vanguard partnered with Coveo, using its AI-Relevance Platform to solve this challenge. Rather than consolidating content into a single system, the platform acts as a central intelligence layer, enabling:

- Unified index centralizing access across multiple knowledge sources without costly data migration

- Personalized, context-driven knowledge discovery tailored to each employee’s needs, drawing only on the content they’re authorized to see

- AI-powered retrieval of trusted content before generating responses
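The general pattern, permission-aware retrieval from a unified index followed by a prompt grounded in the retrieved passages, can be sketched in a few lines of Python. Everything here is hypothetical: the `IndexedItem` record, the group-based permission model, and the naive keyword-overlap scoring stand in for a real relevance engine and are not the Coveo API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndexedItem:
    source: str                 # originating system, e.g. "sharepoint" (illustrative)
    title: str
    body: str
    allowed_groups: frozenset   # groups permitted to view this item


def search(index, query, user_groups, k=3):
    """Return up to k visible items, ranked by naive keyword overlap."""
    terms = set(query.lower().split())
    # Enforce permissions first so restricted items are never scored.
    visible = [item for item in index if item.allowed_groups & user_groups]
    scored = []
    for item in visible:
        words = set(f"{item.title} {item.body}".lower().split())
        overlap = len(terms & words)
        if overlap:
            scored.append((overlap, item))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in scored[:k]]


def build_grounded_prompt(query, passages):
    """Build a prompt that tells the model to answer only from the passages."""
    context = "\n".join(
        f"[{i}] ({p.source}) {p.title}: {p.body}"
        for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer using only the numbered passages below. "
        "If they do not contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {query}"
    )


# Hypothetical index spanning two source systems with different audiences.
index = [
    IndexedItem("servicenow", "Password reset", "how to reset a locked password",
                frozenset({"it"})),
    IndexedItem("sharepoint", "Payroll rules", "confidential payroll password rules",
                frozenset({"hr"})),
]
hits = search(index, "password reset", user_groups={"it"})
print(build_grounded_prompt("password reset", hits))
```

Applying the permission check before ranking means restricted content never reaches the scoring step or the prompt, which mirrors the requirement that employees only see what they are authorized to access.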

“Coveo fits well with other systems,” said Mike Marnocha, Senior Director of Product Development & AI at RightPoint. “You don’t have to migrate content into one system or worry about connectivity issues. Coveo handles that, becoming the central hub for enterprise knowledge.”

This intelligent search foundation has significantly reduced time spent searching for information, increasing employee efficiency and content discoverability.

Relevant reading: Why Quality Matters Over Quantity in Enterprise Search Connectors

Best Practices for AI-Powered Digital Transformation in Finance

Vanguard’s approach to AI adoption has followed a crawl, walk, run methodology:

- Start with internal use cases. The company first implemented AI-powered search for IT and internal knowledge bases, ensuring accuracy before expanding to customer-facing applications.

- Ensure AI has access to clean, current content. AI retrieval must be governed, secured, and continuously refined.

- Expand based on performance data. AI adoption is measured against compliance accuracy, employee feedback, and business impact metrics before broader rollout.

“Initially, we turned GenAI on for our IT professionals,” Gatson explained. “They’re early adopters, so we gathered feedback before rolling it out to 50% of employees. Only after ensuring accuracy did we expand it to all 30,000 employees.”
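A crawl, walk, run rollout like the one Gatson describes is often implemented with feature gating. The sketch below is one hypothetical way to express the three stages in Python: IT first, then roughly half of the remaining employees selected by a stable hash of their ID, then everyone. The stage numbers, the `department` field, and the choice to keep IT enabled at every stage are assumptions for illustration, not details from Vanguard.

```python
import hashlib


def rollout_bucket(employee_id: str) -> int:
    """Map an employee ID to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(employee_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def genai_enabled(employee_id: str, department: str, stage: int) -> bool:
    """Hypothetical three-stage gate.

    Stage 1: IT only. Stage 2: IT plus roughly 50% of other employees.
    Stage 3: all employees.
    """
    if stage >= 3 or department == "IT":
        return True
    if stage == 2:
        return rollout_bucket(employee_id) < 50
    return False


for stage in (1, 2, 3):
    print(stage, genai_enabled("emp-1042", "Client Services", stage))
```

Hashing with SHA-256 rather than Python’s built-in `hash()` keeps each employee’s bucket stable across processes, since string hashing in Python is randomized per run; that stability means the same people stay in the pilot group while feedback is collected.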

Relevant reading: 6 Data Cleaning Best Practices for Enterprise AI Success

Measuring AI Success in Financial Services

Vanguard evaluates AI performance using both qualitative and quantitative metrics, including:

- Employee efficiency gains: Reducing time spent searching for information

- Compliance accuracy: Ensuring AI-generated content meets regulatory standards

- User engagement and adoption: Monitoring feedback and trust levels

The company also tracks click-through rates and search effectiveness. As Gatson noted, low negative feedback can indicate AI success: “We’ve seen less than 1% negative feedback. If people hated it, we would hear it.”
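Metrics like these reduce to simple ratios over logged events. The following sketch assumes a hypothetical event log in which each entry is one of `"search"`, `"click"`, `"thumbs_up"`, or `"thumbs_down"`; it treats clicks per search as a rough click-through proxy and computes the negative-feedback share over rated answers only.

```python
from collections import Counter


def feedback_metrics(events):
    """Compute simple search and answer-quality ratios from an event log.

    events: iterable of strings, each "search", "click", "thumbs_up",
    or "thumbs_down" (a hypothetical logging format).
    """
    counts = Counter(events)
    searches = counts["search"]
    rated = counts["thumbs_up"] + counts["thumbs_down"]
    return {
        # Clicks per search: a rough proxy for click-through rate.
        "click_through_rate": counts["click"] / searches if searches else 0.0,
        # Share of rated answers that received a thumbs-down.
        "negative_feedback_rate": counts["thumbs_down"] / rated if rated else 0.0,
    }


log = ["search"] * 200 + ["click"] * 150 + ["thumbs_up"] * 199 + ["thumbs_down"]
print(feedback_metrics(log))
# {'click_through_rate': 0.75, 'negative_feedback_rate': 0.005}
```

The denominator is a design choice worth stating when reporting a figure such as a negative-feedback rate: dividing by rated answers gives a different number than dividing by all answers shown, because many users never rate at all.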

The Future of AI in Financial Services

Vanguard’s pioneering approach demonstrates that AI in financial services can be a transformative force for innovation when it is carefully governed and strategically implemented. AI is not about replacing employees; it is about augmenting expertise, reducing inefficiencies, and improving decision-making.

“We actually hear that they want it to do more,” Gatson noted, when asked about user feedback. “They want AI to handle transactional tasks so they can focus on delivering real financial advice.”

For financial firms evaluating AI, the key to success is balancing innovation with compliance. AI must deliver trusted, secure, and regulated insights, not just faster responses, so that employees can meet stakeholder and customer needs in real time.

As financial institutions navigate the future of AI-powered knowledge discovery, Vanguard offers an inspiring example of the value of a search-first, compliance-driven approach. AI can be a powerful tool, but only when it is built on a strong foundation of accuracy, governance, and regulatory integrity.

