Elastic vs Coveo — how do you choose?

Once upon a time, enterprise search was a (relatively) simple proposition. A search engine would read a file, convert it into text, then index terms it found in that text in a database. If a document had an indexed term, then querying for that term would return a link to the appropriate document. If it didn’t, no link was returned.
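The term-to-document lookup described above is usually called an inverted index. The following Python sketch is a minimal, in-memory illustration under simplifying assumptions: plain-text inputs, lowercase alphanumeric tokens, and a dictionary standing in for the database. The function names are hypothetical and are not any engine's API.

```python
import re
from collections import defaultdict

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map each term to the set of document IDs that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index

def search(index: dict[str, set[str]], term: str) -> set[str]:
    """Return the IDs of documents containing the term (an empty set if none do)."""
    return index.get(term.lower(), set())

docs = {
    "manual.txt": "Shifting gears with a clutch pedal",
    "ev.txt": "Electric motors need no clutch",
}
index = build_index(docs)
print(sorted(search(index, "clutch")))  # ['ev.txt', 'manual.txt']
print(sorted(search(index, "piston")))  # []
```

A document either contains the indexed term and is returned, or it doesn't and isn't: there is no notion of relevance yet.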

Over the years, search became more sophisticated, kind of like going from a car with a manual transmission to one that had an automatic transmission. The manual transmission car was, for many, more fun to drive – but there was the ever-present risk of grinding (or stripping) gears.

You had to stay on top of how many revolutions per minute the engine was running at, and it required that you focus as much on shifting as on steering, which was the source of more than a few accidents. You also had to be intimately familiar with all the parts involved: the clutch pedal, the brake pedal, and the accelerator.

For the gearhead, tinkering under the hood was art, science, and a whole lot of fun. But when you have the means and need to run a fleet, tinkering becomes a luxury that just isn’t practical. So it is with search: a DIY stack like Elastic versus a SaaS platform like Coveo.

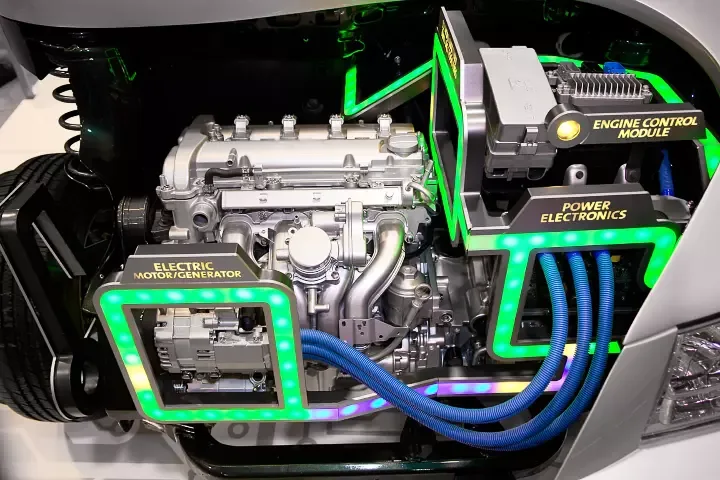

Evolution of Search from Manual to Electric

The electric vehicle still has a transmission, but the power it delivers no longer depends on how often the pistons fire; it depends on how much electrical current is sent to the motors that drive the wheels.

In the world of search engines, the same kind of evolution happened. Stemming, which reduces different forms of a word (such as “searching” and “searched”) to a common root so that they match the same query, became a standard part of indexing, as did indexing two- and three-word phrases to capture relationships between words.
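To make stemming and phrase indexing concrete, here is a toy Python sketch. The suffix list and the `stem` function are hypothetical and far cruder than real algorithms such as the Porter or Snowball stemmers; the `phrases` helper shows one way two- and three-word phrases (word n-grams) can be generated as extra index entries.

```python
import re

# Hypothetical, deliberately crude suffix list for illustration only.
# Real engines use algorithms such as the Porter or Snowball stemmers.
SUFFIXES = ("ing", "ed", "es", "s")

def stem(word: str) -> str:
    """Strip the first matching suffix, but only if a plausible root remains."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def terms(text: str) -> list[str]:
    """Tokenize and stem the text so different word forms share one index term."""
    return [stem(w) for w in re.findall(r"[a-z0-9]+", text.lower())]

def phrases(tokens: list[str], n: int) -> list[str]:
    """Return every run of n consecutive tokens as a single phrase entry."""
    return [" ".join(tokens[i : i + n]) for i in range(len(tokens) - n + 1)]

tokens = terms("Searching indexed documents quickly")
print(tokens)              # ['search', 'index', 'document', 'quickly']
print(phrases(tokens, 2))  # ['search index', 'index document', 'document quickly']
print(phrases(tokens, 3))  # ['search index document', 'index document quickly']
```

Because "searching", "searched", and "searches" all reduce to "search" here, a query for any one of them can match documents containing the others.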

Ranking algorithms became more sophisticated at determining which of two documents more closely matched the intent of a search, making results more relevant. In general, manual fine-tuning of search, which had been a pain point nearly from the beginning, became less and less necessary.
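One classic way to rank documents is TF-IDF, which rewards terms that are frequent in a document but rare across the collection. This Python sketch is a simplified scoring function chosen for illustration only; it is not the exact formula any particular product uses (Elasticsearch, for instance, defaults to BM25, a refinement of the same idea).

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())

def tf_idf_scores(docs: dict[str, str], query: str) -> dict[str, float]:
    """Score every document against the query as a sum of per-term TF-IDF weights."""
    tokenized = {doc_id: tokenize(text) for doc_id, text in docs.items()}
    n_docs = len(tokenized)
    query_terms = tokenize(query)
    # Document frequency: how many documents contain each query term at all.
    df = {t: sum(1 for toks in tokenized.values() if t in toks) for t in query_terms}
    scores: dict[str, float] = {}
    for doc_id, tokens in tokenized.items():
        counts = Counter(tokens)
        score = 0.0
        for term in query_terms:
            if df[term] == 0 or not tokens:
                continue  # term absent everywhere (or empty doc): contributes nothing
            tf = counts[term] / len(tokens)    # how prominent the term is in this doc
            idf = math.log(n_docs / df[term])  # how rare the term is across the collection
            score += tf * idf
        scores[doc_id] = score
    return scores

docs = {
    "a": "electric car electric motor",
    "b": "gas car with a manual gearbox",
    "c": "electric scooter",
}
scores = tf_idf_scores(docs, "electric car")
ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
print([doc_id for doc_id, _ in ranked])  # ['a', 'c', 'b']
```

Document "a" wins because it mentions both query terms prominently; "b" mentions "car" but buries it among other words.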

About half a decade ago (oddly, about the same time that electric vehicles began to be produced commercially) the whole search game changed.

In the search engine space, searching documents was replaced with searching big data stores: activity logs, JSON files, spreadsheets, and so forth. Indexing text remained important, but search engines needed to be more intelligent in identifying patterns in structured and semi-structured content, as this kind of big (albeit sparse) data became more important to managers, programmers, and data scientists alike.

The open-source Elasticsearch project (built on the Apache Lucene library and originally released under the Apache License) started down the road toward document-centric search before recognizing that its target market was changing, and shifted instead toward applying search algorithms to more structured data.

As such, Elasticsearch is a great solution for processing large volumes of time-series and log-file data to identify patterns and anomalies and to obtain meaningful insights. Its large-scale log analytics capabilities for observability and security are powerful, and many organizations even use it as a primary data store. This focus helped Elastic differentiate itself early on from another open-source stack, Apache Solr, which remained firmly entrenched in the document space.

However, as with Solr, Elasticsearch tended to attract the same kind of DIY developers who wanted a stack of their own for customized, data-centric search. In other words, Elasticsearch tried to bridge the transition from manual to automatic, offering tools that could be customized (given a deep understanding of what was going on under the hood), but it failed to recognize that the equivalent of electric vehicles was beginning to change the landscape altogether.

DIY Fueled by Talented Developers

Generally, open-source projects tend to hit an evolutionary midpoint before plateauing. Open source is great for small and even some midsized companies that want to gain a competitive edge and have an army of lean, hungry programmers to throw at customizing a project to their needs.

However, too much of an insistence on open source can actually prove debilitating to enterprise customers. It forces those customers to become software shops themselves just to be able to maintain and customize the application, often at the expense of more monetizable activities.

Moreover, the effort to make that open-source software integrate well with existing infrastructure, both functionally and in how well it fits the needs of users within the organization, can often end up costing far more than is saved in licensing costs.

For example, the Hadoop movement took an open-source project built on a MapReduce core for processing large datasets in parallel and very quickly built up a complex ecosystem of Pigs, Hives, and other assorted quasi-mythical entities, spawning two largish companies in the process.

The enterprise community responded by rethinking the whole nature of cloud computing, realizing that there were more efficient ways of tackling the same problem, and transformed the Big Data space seemingly overnight … leaving Hadoop mostly stranded in its wake.

Elasticsearch and its associated stack (Kibana, Beats, and Logstash) increasingly face the same problem. Even as the need for a big data alternative to Solr was becoming more evident, deep changes were going on under the hood in how text processing was done, specifically in the arena of machine learning.

Easy Shift to Machine Learning

This shift involved taking a different approach altogether: focusing less on optimizing document ranking algorithmically and more on identifying, modeling, and training machine-learning systems to better process queries through an automated pipeline. This is the approach taken by the commercial SaaS product Coveo.

This approach is very much analogous to the switch from internal combustion engines to electric vehicles. Machine learning-based SaaS products such as Coveo have a number of benefits over purely index-based systems such as Elasticsearch. For starters, algorithmic index-based approaches rely primarily on multiple indexes that are not always compatible with one another, and they tend to become computationally expensive when you push for better performance.

In a machine learning approach, on the other hand, the initial model creation tends to be more computationally expensive. But once the model is set up, you can frequently get far better correlations at much lower runtime cost, which means better answers, retrieved faster.

At the same time, you can stack and filter such models far more easily than you can fine-tune algorithms, which is important when you don’t have a team of data scientists at your beck and call.

This holds especially true given how powerful text processing has become. Natural language processing and natural language generation (NLP and NLG, respectively) have improved dramatically in the last few years, largely by replacing what had been hard-coded algorithms with learned models such as BERT and other Transformer-based models.

Transformers form the foundation of OpenAI’s GPT-3 text analysis and generation system, and they look set to define the direction of both NLP and NLG for at least the next decade. These models are an order of magnitude more sophisticated than anything in Elasticsearch, and they are a feature of Coveo.

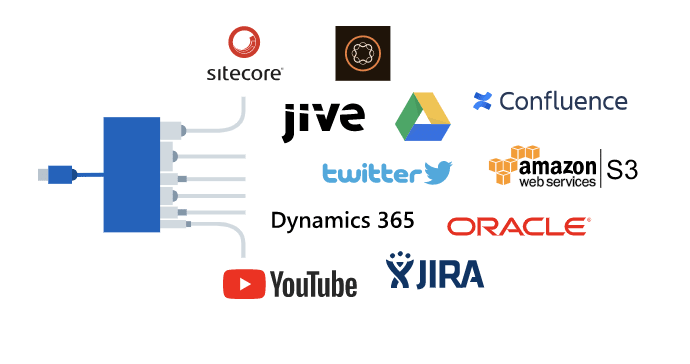

10x Support for Connectors

Another area where commercial SaaS applications such as Coveo shine is in providing better support across multiple vendors’ systems, something that can be complicated when working within the constraints of open-source licenses.

Elastic supports only around a dozen very generic plugins, which means that if you are dealing with enterprise data applications, you can expect to spend a fair amount of time programming your own connectors or relying on community connectors that may be at varying stages of development and inconsistently tested.

For example, Elastic markets Workplace Search and App Search together as a single solution called Elastic Enterprise Search. But while they’re marketed as one solution, the two are actually quite siloed, and some features available in one are not available in the other. Adopters therefore need to think carefully about implementation.

Elastic App Search requires you to upload a JSON file or ingest content via the API, and its web crawler is still only in beta and not covered by an SLA. Workplace Search, by contrast, offers about 16 connectors, including Salesforce, but the only default Salesforce content types are contacts, leads, accounts, campaigns, and attachments.

So, if you’re going to develop your own custom connectors, you need to understand the system you are connecting to, learn that system’s API, and make sure the mapping from the original document to the indexed document is correct.
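As a rough illustration of that mapping step, here is a hypothetical Python sketch. The source field names (`Id`, `Subject`, `Description`), the `IndexedDocument` shape, `FIELD_MAP`, and the URL are all assumptions made for the example; they are not the schema of Salesforce, Elastic, or Coveo.

```python
from dataclasses import dataclass, field

@dataclass
class IndexedDocument:
    """Hypothetical shape of a document as stored in the search index."""
    uri: str
    title: str
    body: str
    metadata: dict[str, str] = field(default_factory=dict)

# Hypothetical mapping from source-system field names to index fields.
FIELD_MAP = {"Subject": "title", "Description": "body"}

def map_record(record: dict[str, str], base_url: str) -> IndexedDocument:
    """Translate one source record into an indexed document.

    Fields named in FIELD_MAP become first-class index fields; everything else
    is kept as metadata so it can still be filtered or faceted on.
    """
    if "Id" not in record:
        raise ValueError("source record has no Id; cannot build a stable URI")
    mapped = {target: record.get(source, "") for source, target in FIELD_MAP.items()}
    extras = {k: v for k, v in record.items() if k not in FIELD_MAP and k != "Id"}
    return IndexedDocument(
        uri=f"{base_url.rstrip('/')}/{record['Id']}",
        title=mapped["title"],
        body=mapped["body"],
        metadata=extras,
    )

doc = map_record(
    {"Id": "500x1", "Subject": "Login fails", "Description": "Error 403 on SSO", "Status": "Open"},
    "https://crm.example.com/cases/",
)
print(doc.uri)       # https://crm.example.com/cases/500x1
print(doc.metadata)  # {'Status': 'Open'}
```

Every connector needs some version of this translation, and getting it wrong silently degrades search quality, which is why tested, prebuilt connectors matter.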

You also need to understand the system’s security model in order to extract security information from documents and reflect that information on the indexed documents. Indexing and maintaining document-level security, including security expansion, has been part of Coveo since day one. Using open-source engines like Elastic will require more configuration (coding) and manual mapping of user identities.
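Security expansion generally means resolving a user into every group they belong to, including nested groups, and then filtering results against each document’s permissions. This Python sketch assumes a hypothetical in-memory group map (`GROUP_MEMBERS`) and an `allowed` list on each result; real systems pull this information from sources such as a directory service, and the details differ by product.

```python
from collections import deque

# Hypothetical group membership data: group -> members (users or nested groups).
GROUP_MEMBERS = {
    "all-staff": {"engineering", "sales"},
    "engineering": {"alice", "platform-team"},
    "platform-team": {"bob"},
    "sales": {"carol"},
}

def expand_identities(user: str, groups: dict[str, set[str]]) -> set[str]:
    """Return the user plus every group containing them, directly or via nesting."""
    # Invert the membership map so we can walk upward from the user.
    parents: dict[str, set[str]] = {}
    for group, members in groups.items():
        for member in members:
            parents.setdefault(member, set()).add(group)
    identities = {user}
    queue = deque([user])
    while queue:
        current = queue.popleft()
        for group in parents.get(current, set()):
            if group not in identities:  # also guards against membership cycles
                identities.add(group)
                queue.append(group)
    return identities

def security_trim(results: list[dict], user: str) -> list[dict]:
    """Keep only results whose allowed principals overlap the user's identities."""
    identities = expand_identities(user, GROUP_MEMBERS)
    return [doc for doc in results if identities & set(doc["allowed"])]

results = [
    {"id": "roadmap", "allowed": ["engineering"]},
    {"id": "pricing", "allowed": ["sales"]},
    {"id": "handbook", "allowed": ["all-staff"]},
]
print([d["id"] for d in security_trim(results, "bob")])  # ['roadmap', 'handbook']
```

Bob is never listed directly on any document, but expansion through `platform-team` and `engineering` up to `all-staff` gives him access to two of the three results.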

Commercial applications like Coveo typically have eight to ten times as many connectors, with the functionality above built in. These connectors are usually rigorously tested, so you can be productive with them far more quickly than with open-source alternatives.

Turnkey Dashboards, Administrative Tools

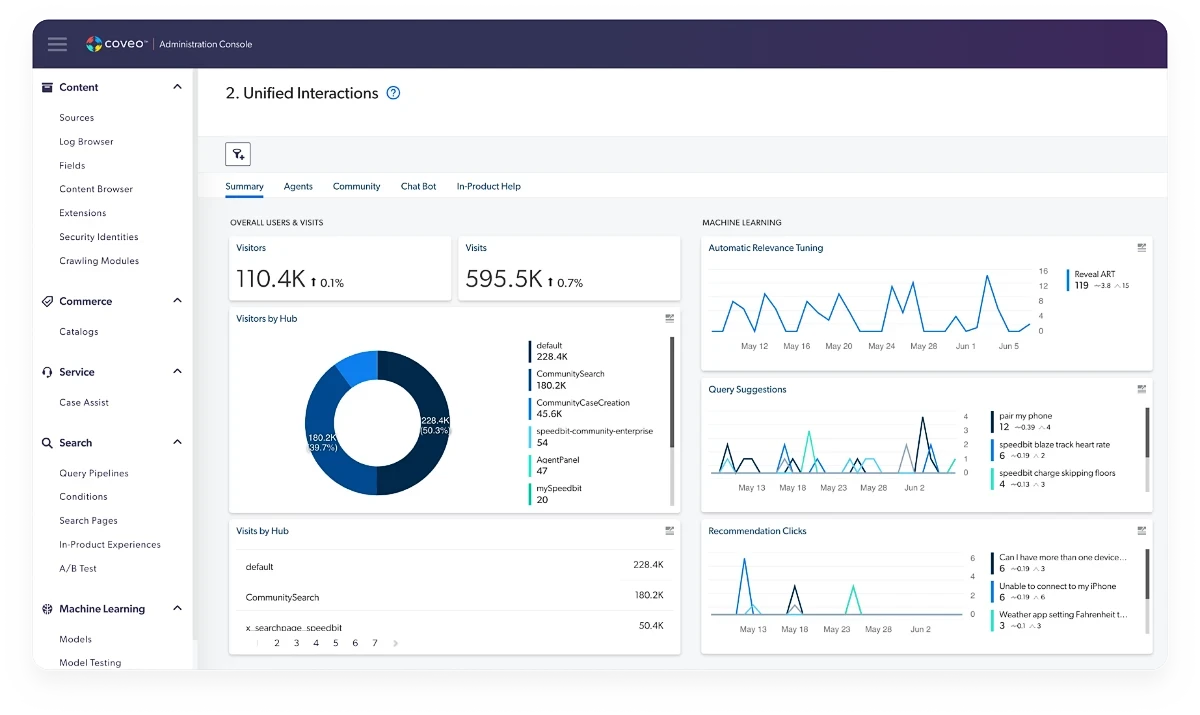

Another area where commercial applications like this make a difference is in the depth of management tools, dashboards, and intelligent UX design. This is a common failing of open source platforms—they evolve to the point where the developer can take over, and then that evolution mostly stops.

Commercial applications, on the other hand, need to be far more ready out of the box, need to be reliable, and need to have solid documentation, all places where open source tools have at best mixed track records.

The same philosophy applies to customer support. In a few cases, open-source solutions have been successfully monetized through intelligent technical support, but the track record of such efforts is spotty at best. Companies regularly adopt open-source solutions such as Elastic, only to switch gears a few years in because they have to train their own internal teams and are left with technology that fails to keep pace with evolutionary trends.

Commercial solutions have their own timelines to consider. But most often they remain stable far longer, and for established early players such as Coveo in particular, the incentive to stay at the cutting edge with complementary products is strong.

Elasticsearch is a good product if you want the equivalent of a high-maintenance internal combustion engine. But within the domain of search, Coveo is superior not only to Elastic but also to Solr, and in the long run it is cost-competitive with both.

Of course, if you want the roar of a gas-powered engine, Coveo can add that into its metaphorically electric-powered underpinnings via a good set of environmentally-friendly speakers.

Dig Deeper

Wondering what else to consider when selecting an enterprise search platform? Check out our Buyers Guide for Enterprise Search Platforms to get a tour beneath our electric car’s hood.

Or, if you want to get hands on with our AI-powered relevance platform, give Coveo a spin with free, full access for 30 days. Build a prototype within minutes!