Generative AI (GenAI) has moved from the realm of science fiction, through tech teams, to the highest levels of executive management.

McKinsey’s latest data reveals that over a quarter of C-suite executives are personally using GenAI for work. AI is on board agendas and making its way into budgets: according to McKinsey, 40% of respondents say their organizations plan to increase investment in AI specifically because of advances in GenAI.

From where we’re standing, GenAI has a bright, practically blinding, future, but it’s not without risks. In its nascent form, GenAI presents a host of obstacles, including inaccuracy (famously referred to as “hallucinations”): it makes things up, sometimes with spectacularly bad consequences. To avoid this, it’s important to ground the large language models (LLMs) that power GenAI in accurate information.

Grounding an LLM means connecting your AI model to real-world data and knowledge so that its output is more accurate and relevant for specific use cases.

As we enter the next phase of GenAI adoption, the question is no longer “Will businesses embrace and leverage GenAI?” but “How can enterprises ground it in reality?”

Retrieval Augmented Generation Grounds LLMs in Enterprise Knowledge

Retrieval augmented generation (RAG) is a powerful AI framework that improves the quality of LLM output by giving the system access to information beyond its static training data. It retrieves content from trusted data sources, such as your intranet, CRM platform, and product database, and then uses GenAI to weave that content into its response to the user.

Here’s a summary of how it works in the context of site search:

- A user asks a question or types a query into the search field of your website

- The system retrieves the most contextually relevant content chunks from your knowledge base (the knowledge base is split into these smaller passages ahead of time, a process called “chunking”)

- The system passes these content chunks to the LLM as additional context, supplying the current facts that inform its response

- The GenAI system generates a relevant, grounded answer from the content chunks, citing the specific data sources used.
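To make the steps above concrete, here is a minimal Python sketch of the retrieve-then-prompt loop. It is an illustration under simplifying assumptions, not how Coveo implements RAG: relevance is scored by counting shared keywords (a real system would use semantic search and ML ranking), the `Chunk` type and all function names are hypothetical, and the actual LLM call is left out, so the sketch stops at the grounded prompt that would be sent to the model.

```python
import re
from dataclasses import dataclass

# Tiny illustrative stopword list (an assumption); real systems handle this very differently.
STOPWORDS = {"a", "an", "the", "how", "many", "do", "i", "have", "to", "is", "what", "of", "for"}


@dataclass
class Chunk:
    source: str  # where the passage came from, e.g. a page path (hypothetical)
    text: str


def keywords(text: str) -> set[str]:
    """Lowercase word set minus stopwords; a crude stand-in for a relevance model."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(query: str, chunks: list[Chunk], k: int = 2) -> list[Chunk]:
    """Return up to k chunks that share the most keywords with the query."""
    q = keywords(query)
    scored = [(len(q & keywords(c.text)), c) for c in chunks]
    scored = [pair for pair in scored if pair[0] > 0]  # drop chunks with no overlap at all
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]


def build_grounded_prompt(query: str, hits: list[Chunk]) -> str:
    """Number each retrieved chunk so the model can cite its sources as [1], [2], ..."""
    context = "\n".join(f"[{i}] ({c.source}) {c.text}" for i, c in enumerate(hits, start=1))
    return (
        "Answer using only the numbered passages below, and cite them like [1].\n"
        f"{context}\n\nQuestion: {query}"
    )


knowledge_base = [
    Chunk("kb/returns", "Items can be returned within 30 days of delivery."),
    Chunk("kb/shipping", "Standard shipping takes 3 to 5 business days."),
    Chunk("kb/warranty", "The warranty covers manufacturing defects for one year."),
]
question = "How many days do I have to return items?"
hits = retrieve(question, knowledge_base)
prompt = build_grounded_prompt(question, hits)  # this string would be sent to the LLM
print(prompt)
```

Note that the shipping passage is retrieved too, because it shares the word “days”; that kind of shallow keyword matching is exactly what embeddings and ML ranking are meant to improve on.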

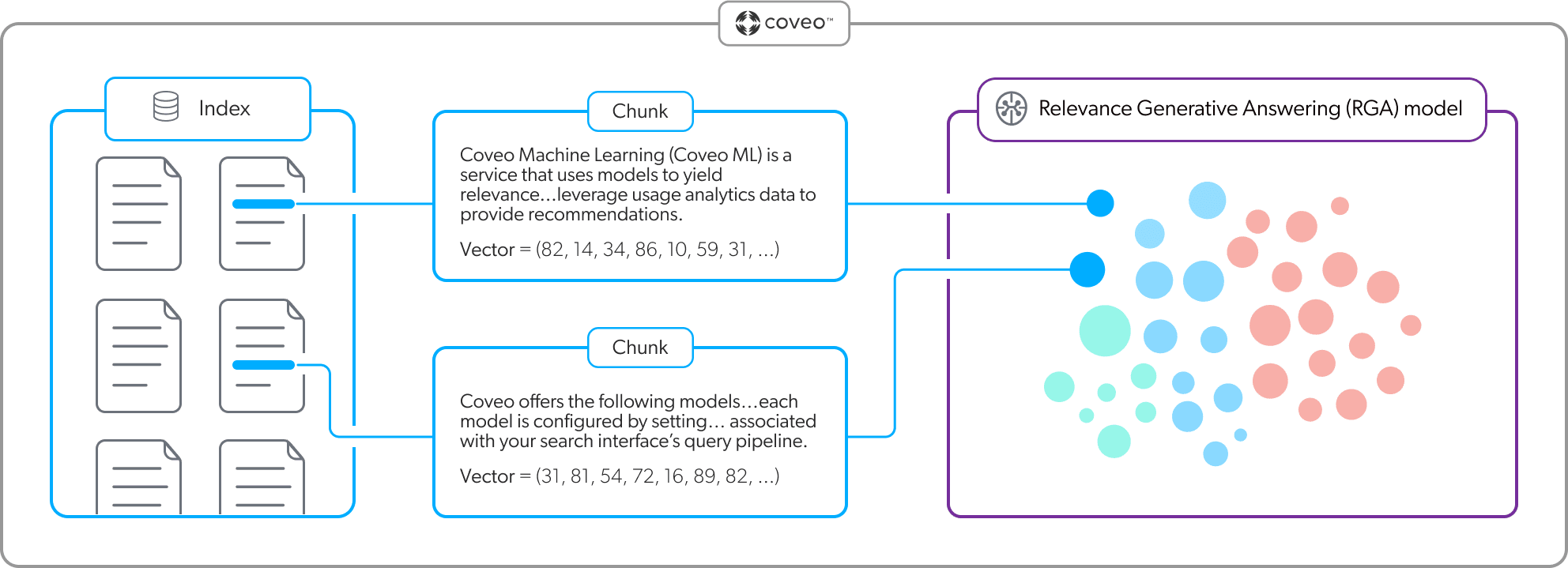

Coveo uses the RAG framework to power our Relevance Generative Answering (RGA) model for site search. The model creates embeddings of your approved content; embedding is a machine learning process that converts text into vectors (numerical representations). In RGA, these embeddings enable the search engine to generate highly relevant answers to user queries.
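The idea of turning text into vectors and comparing them can be pictured with a deliberately simple stand-in. The sketch below uses word-count vectors over a shared vocabulary and cosine similarity; production systems use learned neural embedding models that capture meaning rather than exact word matches, so treat this only as an illustration of the text-to-vector-to-similarity idea. All function names are hypothetical.

```python
import math
import re


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def build_vocab(texts: list[str]) -> dict[str, int]:
    """Map every word seen in the corpus to a fixed vector position."""
    words = sorted({w for t in texts for w in tokenize(t)})
    return {w: i for i, w in enumerate(words)}


def embed(text: str, vocab: dict[str, int]) -> list[float]:
    """Count-based vector with one dimension per vocabulary word (unknown words are ignored)."""
    vec = [0.0] * len(vocab)
    for w in tokenize(text):
        if w in vocab:
            vec[vocab[w]] += 1.0
    return vec


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


docs = [
    "How to reset a forgotten password",
    "Quarterly revenue grew by ten percent",
]
vocab = build_vocab(docs)
doc_vectors = [embed(d, vocab) for d in docs]
query_vector = embed("reset my password", vocab)
scores = [cosine(query_vector, v) for v in doc_vectors]
best_match = docs[max(range(len(docs)), key=lambda i: scores[i])]
```

Because similarity is computed on vectors rather than raw strings, the same comparison works for any pair of texts once they are embedded; learned embeddings simply place related meanings (for example, “reset” and “recover”) close together, which word counts cannot do.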

A key advantage of the RAG framework is its ability to significantly reduce hallucinations. RAG also improves the relevance of search results by delivering the most timely and appropriate information for each user’s query. And because it draws on vetted internal content, and can respect the access permissions on that content, RAG also helps keep sensitive company information secure.

The Challenges of GenAI for Enterprises

CIOs are under pressure to deploy GenAI quickly and effectively, but the technology comes with significant challenges, and ignoring them is not an option. Before you can pinpoint and invest in an enterprise-level use case for GenAI, it’s important to understand the risks, which include:

Security

Public GenAI tools expose businesses to new security risks because they don’t have built-in security layers for enterprises. In our recent GenAI Report, we found that 71% of senior IT leaders believe GenAI could introduce new security risks in their organization.

If enterprises don’t have a secure index that considers permissions and roles, generated answers can come back with content users shouldn’t see. This is where grounding LLMs with techniques like retrieval augmented generation can help, because it relies on an enterprise’s own secure, vetted content.
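Here is a minimal sketch of what a permission-aware retrieval step can look like, assuming each indexed chunk carries the groups allowed to read it (mirroring the permissions in the source system). The key design choice is that the permission filter runs before anything is matched, ranked, or handed to the LLM, so a generated answer cannot quote content the user isn’t entitled to see. The types, group names, and keyword matching are hypothetical simplifications.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class SecuredChunk:
    source: str
    text: str
    allowed_groups: frozenset[str]  # assumed to be copied from the source system's permissions


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def secure_search(query: str, chunks: list[SecuredChunk], user_groups: set[str]) -> list[SecuredChunk]:
    """Filter by permissions first, then match; restricted chunks never reach the LLM."""
    visible = [c for c in chunks if c.allowed_groups & user_groups]
    q = words(query)
    return [c for c in visible if q & words(c.text)]


index = [
    SecuredChunk("hr/salary-bands", "Salary bands for engineering roles", frozenset({"hr"})),
    SecuredChunk("kb/benefits", "Employee benefits and annual salary review dates", frozenset({"all-employees"})),
]

sales_rep_results = secure_search("salary", index, {"all-employees", "sales"})
hr_results = secure_search("salary", index, {"all-employees", "hr"})
```

The same query returns different grounding content for different users, which is the behavior a secure, permission-aware index is meant to guarantee.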

Content

Public GenAI platforms have been trained on vast swaths of the internet. But they haven’t seen the unique data your organization stores in popular SaaS platforms like Salesforce, SAP, SharePoint, or Dynamics 365, and the power of a large language model increases with access to more content. If you want GenAI technology to answer questions about your enterprise’s specific products, services, and organization, that information needs to be indexed securely.

An LLM, and the AI system it powers, is only as good as the content it draws from: it’s the difference between researching a topic from a single book and digging into an entire library. But many companies are ill-prepared to make use of GenAI because their content is spread across multiple, siloed repositories, which limits the timeliness and relevance of the content GenAI can access. A unified index with RAG layered on top addresses this by letting LLMs access current, relevant information from an enterprise’s own trusted data sources.

Building connectors to index every complex system in a unified way is difficult, time-consuming, and expensive.
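To show what “unified” means in practice, the sketch below normalizes records from two different systems into one shared document schema, with a source-qualified ID and a last-modified timestamp so freshness can be compared across repositories. The record shapes and field names (`CaseId`, `Subject`, and so on) are hypothetical and are not the actual Salesforce, SharePoint, or other vendor APIs; real connectors also have to handle authentication, pagination, incremental updates, and permissions, all of which this sketch omits.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class IndexedDocument:
    doc_id: str  # source-qualified so IDs from different systems never collide
    source_system: str
    title: str
    body: str
    updated_at: datetime


def from_crm_case(case: dict) -> IndexedDocument:
    # Hypothetical CRM record shape; not a real vendor schema.
    return IndexedDocument(
        doc_id=f"crm:{case['CaseId']}",
        source_system="crm",
        title=case["Subject"],
        body=case["Resolution"],
        updated_at=datetime.fromisoformat(case["LastModified"]),
    )


def from_wiki_page(page: dict) -> IndexedDocument:
    # Hypothetical intranet/wiki page shape.
    return IndexedDocument(
        doc_id=f"wiki:{page['id']}",
        source_system="wiki",
        title=page["title"],
        body=page["content"],
        updated_at=datetime.fromisoformat(page["modified"]),
    )


def build_unified_index(crm_cases: list[dict], wiki_pages: list[dict]) -> list[IndexedDocument]:
    """Normalize every record into one schema, newest content first."""
    docs = [from_crm_case(c) for c in crm_cases] + [from_wiki_page(p) for p in wiki_pages]
    return sorted(docs, key=lambda d: d.updated_at, reverse=True)


index = build_unified_index(
    crm_cases=[{"CaseId": 5001, "Subject": "Login loop on mobile",
                "Resolution": "Clear the app cache and sign in again.",
                "LastModified": "2024-05-02T10:00:00"}],
    wiki_pages=[{"id": "it-42", "title": "Password policy",
                 "content": "Passwords must be rotated every 90 days.",
                 "modified": "2024-03-15T09:30:00"}],
)
```

Once every source maps into the same schema, retrieval, ranking, and permission filtering can be written once instead of once per repository; each new system only needs its own small mapping function.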

Accuracy

GenAI solutions can confidently produce factual errors that appear accurate, a phenomenon called “hallucinations.”

For businesses, these inaccuracies, biases, or misleading outputs can have significant consequences, including eroding customer trust and exposing the business to ethical and legal ramifications. Whether they answer to healthcare professionals or government bodies, companies need to stay compliant and need ways to verify the accuracy of GenAI and the specific information it produces.

Grounding LLMs with RAG significantly reduces hallucinations by basing GenAI responses on factual, up-to-date information.

Consistency

Search and GenAI need to work together to deliver consistent experiences across all channels. If an enterprise provides both chat and search experiences, customers (and employees) should not receive different answers in these channels. This is crucial to offering great experiences. As we noted above, Coveo’s Relevance Generative Answering model leverages RAG to generate highly pertinent responses to user queries in search, ensuring consistency across touchpoints.

Why implement a new technology without first making sure the conversation you’re having with your customer is consistent?
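One way to get that consistency architecturally is to route every channel through a single grounded-answer service rather than giving chat and search their own pipelines. The sketch below is a hypothetical design, not Coveo’s API: both channel handlers call the same `AnswerService`, which normalizes the question and reuses an answer it has already generated. The `generate` callable stands in for a full retrieve-then-generate RAG pipeline.

```python
from typing import Callable


class AnswerService:
    """A single grounded-answer backend shared by every channel (hypothetical design)."""

    def __init__(self, generate: Callable[[str], str]):
        self._generate = generate  # stand-in for a retrieve-then-generate RAG pipeline
        self._cache: dict[str, str] = {}

    @staticmethod
    def normalize(query: str) -> str:
        # Treat "Reset password?" and "  reset   PASSWORD " as the same question.
        return " ".join(query.lower().strip(" ?!.").split())

    def answer(self, query: str) -> str:
        key = self.normalize(query)
        if key not in self._cache:
            self._cache[key] = self._generate(key)
        return self._cache[key]


def handle_search(service: AnswerService, query: str) -> str:
    return service.answer(query)


def handle_chat(service: AnswerService, message: str) -> str:
    return service.answer(message)


calls: list[str] = []


def fake_pipeline(question: str) -> str:
    # Hypothetical stand-in that records calls, so we can see the pipeline ran only once.
    calls.append(question)
    return f"Grounded answer for: {question}"


service = AnswerService(fake_pipeline)
search_reply = handle_search(service, "Reset password?")
chat_reply = handle_chat(service, "  reset   PASSWORD ")
```

In a real deployment, the cache key would also need to include the user’s permissions, and cached answers would need to expire when the underlying content changes.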

Cost

Solving all of the above is complicated. Organizations seeking alternatives to public GenAI tools will need a platform that handles every one of these challenges. But should you build it yourself or buy it?

Building it yourself means assembling several hard pieces: an infrastructure with the necessary security features and role-based access permissions; an intelligence layer that can disambiguate queries and understand user intent; native connectors into popular organizational platforms; and an API layer that works with any front end. The expertise and experimentation needed to build this at scale can take years, delaying time to market and tying up resources you could otherwise spend on innovation.

You may wonder whether there’s a truly enterprise-ready use case for GenAI. The answer is yes, but it requires grounding the LLM behind your GenAI system with a framework like RAG.

Choosing a Grounded GenAI Tool

We feel grounded when we’re standing on something solid and dependable, when we can count on gravity to keep us from drifting away. Think of GenAI grounding in similar terms: it’s about choosing a GenAI tool grounded in facts and high-quality content.

In your business, you shape reality for different audiences, including your employees, customers, shareholders, and partners. This reality is built on a foundation of good content. To achieve accurate grounding, follow a few best practices, which include:

- Creating a comprehensive knowledge management strategy

- Having an up-to-date, searchable, unified index of your content

- Supporting user permissions across different enterprise systems

- Using AI technology like a RAG system that can retrieve relevant information in real time

- Establishing a concrete use case, such as AI-powered generative search, for any GenAI model you plan to deploy

Relevance, in the context of information retrieval, is an integral part of enterprise search technology. A relevance platform matches each user query with the right content, limited to what that user is permitted to access, and pairs those results with industry-leading question answering and semantic search capabilities.

AI comes into play through machine learning (ML)-powered ranking and personalization algorithms that help identify the most appropriate content, which is then fed to the LLM as “grounding context.”

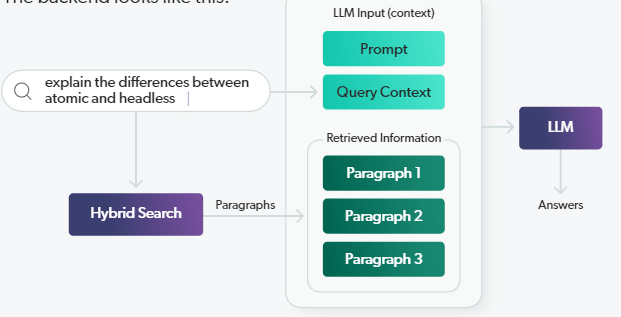

The retrieval system identifies the chunks of content most relevant to the user and the query. Rather than sending full-length documents to the LLM, it keeps the grounding context small (i.e., short passages drawn from larger documents). Retrieving information in small chunks instead of entire documents is important because it ensures you won’t exceed the LLM’s context window.
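The budgeting step can be sketched as follows, assuming the ML ranking has already ordered the candidate chunks best-first. The sketch greedily keeps the highest-ranked chunks that still fit a token budget, skipping any chunk that would overflow it. Both the word-based token estimate and the budget value are illustrative assumptions; a real system would count tokens with the model’s own tokenizer and reserve room for the instructions and the generated answer.

```python
def approx_tokens(text: str) -> int:
    """Rough assumption: one token per whitespace-separated word.
    Real LLM tokenizers often produce more tokens than words."""
    return len(text.split())


def select_grounding_context(ranked_chunks: list[str], budget: int) -> list[str]:
    """Walk the chunks in rank order and keep each one that still fits the token budget."""
    selected: list[str] = []
    used = 0
    for chunk in ranked_chunks:
        cost = approx_tokens(chunk)
        if used + cost <= budget:
            selected.append(chunk)
            used += cost
    return selected


ranked = [
    "Returns are accepted within 30 days",  # 6 words, best match
    "Refunds go back to the original payment method within a week",  # 11 words
    "Store credit never expires",  # 4 words
]
context = select_grounding_context(ranked, budget=12)
```

Skipping an oversized chunk, rather than stopping at it, lets a shorter lower-ranked chunk use the remaining space; stopping would be simpler but could leave part of the budget unused.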

We’ve already touched on chunking as a key part of grounding your LLM. To implement this approach effectively, create a chunking strategy; a good one also helps keep costs manageable as you scale your GenAI system.

Creating a “Chunking” Strategy

So now you know that a comprehensive document chunking strategy is foundational for deploying GenAI at the enterprise level. It’s what allows you to balance the two requirements of GenAI-assisted information retrieval:

- Retrieving enough relevant content for the user’s query to serve as grounding context, while staying within the limits of what the LLM can process

- Ensuring that document chunks work well with semantic search; that is, each chunk should make sense to the searcher on its own, even though it’s a smaller piece of a larger document
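One common chunking strategy, shown here as a minimal sketch, splits each document into fixed-size word windows that overlap slightly, so text cut at one boundary also appears, with some surrounding context, in the neighboring chunk. Prefixing every chunk with its document title is one simple way to help a chunk make sense on its own, which speaks to the second point above. The window and overlap sizes are arbitrary assumptions; production systems typically measure chunks in tokens and often split along headings or paragraphs instead.

```python
def chunk_document(title: str, text: str, max_words: int = 120, overlap: int = 20) -> list[str]:
    """Split text into overlapping word windows, each prefixed with the document title."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must satisfy 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap  # how far each new window advances
    chunks: list[str] = []
    for start in range(0, len(words), step):
        window = words[start:start + max_words]
        chunks.append(f"{title}: {' '.join(window)}")
        if start + max_words >= len(words):  # this window reached the end of the text
            break
    return chunks


sample_text = " ".join(f"w{i}" for i in range(10))
chunks = chunk_document("Returns policy", sample_text, max_words=4, overlap=1)
```

With `max_words=4` and `overlap=1`, each window advances three words, so the last word of one chunk is repeated as the first word of the next.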

Equally important is careful prompt engineering: the process of creating and optimizing the instructions (prompts) that get GenAI to generate the most appropriate answers. Developers use prompt engineering when building LLM-powered applications to make them more effective and less prone to errors.

Prompt engineering involves collecting sample data (for example, representative questions and the answers you expect) to create an evaluation dataset, building prompts into the system, and then running those prompts against the evaluation dataset to test them.
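A minimal sketch of that loop, assuming the simplest possible scoring rule: each evaluation case pairs a sample question with a phrase the answer must contain, and the score is the fraction of cases that pass. The `stub_answer` function is a hypothetical placeholder for your real prompt-plus-LLM pipeline, and substring matching is a deliberately crude check; real evaluations often add human review or more nuanced grading.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    question: str
    must_contain: str  # phrase a correct answer should include (or the expected refusal)


def evaluate(answer_fn: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Fraction of cases whose answer contains the expected phrase (case-insensitive)."""
    if not cases:
        return 0.0
    passed = sum(case.must_contain.lower() in answer_fn(case.question).lower() for case in cases)
    return passed / len(cases)


def stub_answer(question: str) -> str:
    # Hypothetical stand-in for the prompt + LLM pipeline being evaluated.
    if "return" in question.lower():
        return "Returns are accepted within 30 days [1]."
    return "I don't know based on the available documentation."


cases = [
    EvalCase("What is the return window?", "30 days"),
    EvalCase("Who won the 1998 World Cup?", "I don't know"),  # off-topic: should abstain
    EvalCase("How long does standard shipping take?", "3 to 5 business days"),
]
score = evaluate(stub_answer, cases)  # rerun after every prompt or data change
```

Including an off-topic question whose expected answer is a refusal lets the same dataset check that the system admits what it doesn’t know, and the failing shipping case shows how a gap in the prompt or content surfaces as a lower score.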

Selecting the right words, expressions, and symbols is important because getting it wrong means GenAI might just serve up a plate of gibberish. Several key considerations underpin a good prompt engineering approach, and these include:

- Accounting for information deficits – Configure your model to decline to answer when it doesn’t have the right information available. This helps prevent hallucinations and keeps output grounded in real information.

- Sourcing all responses – Supply citations or supporting context alongside each generated answer. This shows users that responses are reliable and credible, and it lets people take a deeper dive if they want to explore a topic more thoroughly.

- Using appropriate language – Use language that’s contextually relevant, professional, and suited to your specific industry and use case. You should also set boundaries that prevent the model from answering questions about offensive or inappropriate content.

- Being consistent and clear – Prompts should be written clearly, with proper grammar and spelling. They should also follow a consistent format across all engineers in an organization, ensuring uniformity and ease of understanding.

- Conducting testing – Functional testing is the only way to know whether the model works and how changes affect GenAI output. Testing also lets you monitor model reliability, consistency, and accuracy as prompts are revised and input data is updated.
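Several of these considerations can be encoded directly in a reusable prompt template, and one of them (sourcing) can be checked automatically in tests. The sketch below is an illustrative example under stated assumptions rather than recommended wording: the template text, the company name “Acme”, and the helper functions are all hypothetical.

```python
import re

# Hypothetical shared template: abstention, citations, tone, and boundaries in one place.
PROMPT_TEMPLATE = """You are a support assistant for {company}.
Answer ONLY from the numbered sources below.
If the sources do not contain the answer, reply exactly: "I don't know based on the available documentation."
Cite each claim with its source number, for example [1].
Keep a professional tone and decline requests for offensive or inappropriate content.

Sources:
{sources}

Question: {question}"""


def build_prompt(company: str, question: str, sources: list[str]) -> str:
    """Fill the shared template so every engineer's prompts use the same format."""
    numbered = "\n".join(f"[{i}] {text}" for i, text in enumerate(sources, start=1))
    return PROMPT_TEMPLATE.format(company=company, sources=numbered, question=question)


def citations_are_valid(answer: str, num_sources: int) -> bool:
    """True if the answer cites at least one source and every citation points to a real source."""
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    return bool(cited) and all(1 <= n <= num_sources for n in cited)


prompt = build_prompt("Acme", "What is the return window?", ["Returns are accepted within 30 days."])
```

A refusal contains no citations, so a test suite would check that case separately, for example by matching the exact refusal sentence the template asks for.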

From an enterprise-ready perspective, the importance of prompt engineering for GenAI can’t be overstated. Poorly configured prompts can lead to irrelevant outputs, introduce bias, cause security breaches, and expose sensitive data to unauthorized users.

The Future of GenAI for Enterprises

In a solidly post-ChatGPT world, grounding LLMs to create an enterprise-ready GenAI solution is top-of-mind for executives, tech leaders, board members, and employees across all types of organizations.

As computer science continues to advance, AI that can understand and generate human-like natural language has become a reality. With this power, however, comes the responsibility to ensure that these language models are grounded in factual, up-to-date information.

Innovation is what puts your company ahead of the competition, but you have to act responsibly. Dynamic grounding techniques like RAG, and solutions built on it like RGA, let you do that. They’re the key to unlocking the full potential of GenAI in the enterprise while ensuring the technology remains a tool for innovation and growth.

Learn more about the different challenges that Coveo Relevance Generative Answering can help you solve — and get questions answered in real time by a search expert by requesting a demo!